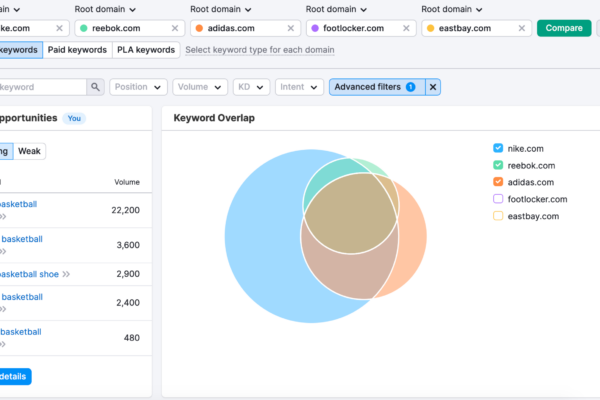

A Step-by-Step Guide to Competitor Research on Semrush

The #1 result on a Google search engine results page (SERP) gets nearly 32% of all clicks. That share is large enough to attract any marketer’s attention. However, the race to the top is never easy. While a large number of businesses focus on SEO, the top ranking can only be achieved by one […]